My key take-home messages from this talk are:

- A big transition is happening now from industrial robots to personal robots.

- Current generation android robots are disappointing: they look amazing but their AI - and hence their behaviour - falls far short of matching their appearance. I call this the brain-body mismatch problem. That's the bad news.

- The good news is that current research in personal human robot interaction (pHRI) is working on a whole range of problems that will contribute to future artificial companion robots.

- Taking as a model from SF the robot butler Andrew in the movie Bicentennial Man, I outline the capabilities that an artificial companion will need to have, and the current research on them - some of it at the Bristol Robotics Laboratory.

- Some of these capabilities are blindingly obvious, like safety; others less so, like gestural communication using body language. Most shocking perhaps is that an artificial companion will need to be self-aware, in order to be safe and trustworthy.

- A very big challenge will be putting all of these capabilities together, blending them seamlessly into one robot. This is one of the current Grand Challenges of robotics.

And here are my speaker notes, for each slide, together with links to the YouTube videos for the movie clips in the presentation. Many of these are longer clips, with commentary by our lab or project colleagues.

Slide 2

There are currently estimated to be between 8 and 10 million robots in the world, but virtually none of these are ‘personal’ robots: robots that provide us with companionship, or assistance if we’re frail or infirm, or that act as helpmates in our workplace. This is odd, when we consider that the word 'robot' was first coined 90 years ago to refer to an artificial humanoid person.

But there is a significant transition happening right now in robotics, from robots like these, working by and large out of sight and out of mind in factories or warehouses, servicing underwater oil wells, or exploring the surface of Mars, to robots working with us in the home or workplace.

What I want to do in this talk is outline the current state of play in artificial companions, and the problems that need to be solved before artificial companions become commonplace.

Slide 3

But first I want to ask what kind of robot companion you would like - taking as a cue robots from SF movies...? I would choose WALL-E!

Before I begin to outline the challenges of building an artificial companion you could trust, let me first turn to the question of robot intelligence.

Slide 4

How intelligent are intelligent robots - not SF robots, but real world robots?

Our intuition tells us that a cat is smarter than a crocodile, which is in turn smarter than a cockroach. So we have a kind of animal intelligence scale. Where would robots fit?

Of course this scale assumes 'intelligence' is one thing that animals, humans or robots have more or less of, which is quite wrong - but let's go with it to at least make an attempt to see where robots fit.

Slide 5

Humans are, of course, at the 'top' of this scale. We are, for the time being, the most 'intelligent' entities we know.

Slide 6

Here is a robot vacuum cleaner. Some of you may have one. Where would it fit?

I would say right at the bottom. It's perhaps about as smart as a single-celled organism - like an amoeba.

Slide 7

This android, called an Actroid robot, from Osaka University, looks as if it should be pretty smart. Perhaps toward the right of this scale..?

But looks can be deceptive. This robot is - I would estimate - little smarter than your washing machine.

Slide 8

Actroid is an illustration of what I call the brain-body mismatch problem: we can build humanoid (android) robots that look amazing, beautiful even, but their behaviours fall far, far short of what we would expect from their appearance. We can build the bodies but not the brains - and it's the problem of bridging this gap that I will focus on now.

But we should note - looking at the amazing Paro robot baby seal - that the brain-body mismatch problem is much less serious for zoomorphic robots. Robot pets. This is why robot pets are, right now, much more successful artificial companions than humanoid robots.

Slide 9

In order to give us a mental model of the kind of artificial companion robot we might be thinking about, let's choose Andrew, the butler robot from the movie Bicentennial Man. The model, perhaps, of an ideal companion robot.

Although I prefer the robotic 'early' Andrew in the movie, rather than the android Andrew that the robot becomes. I think that robots should look like robots, and not like people.

So what are the capabilities that Andrew, or a robot like Andrew, would need?

Slide 10

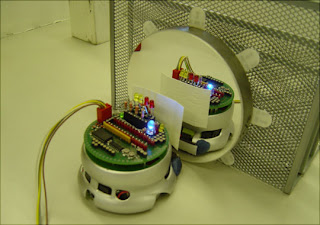

The first, I would strongly suggest, is that our robot needs to be mobile and very, very safe. Safety, and how to guarantee it, is a major current research problem in human robot interaction, and here we see a current generation human assistive robot used in this research.

At this point I want to point out that almost all of the robots, and projects, I'm showing you now are right here in Bristol, at the Bristol Robotics Laboratory, a joint research lab of UWE, Bristol and the University of Bristol, and the largest robotics research lab in the UK.

Slide 11

Humans use body language, especially gesture, as a very important part of human-human communication, and so an effective robot companion needs to be able to understand and use human body language, or gestural communication. This is the Bristol Elumotion Robot Torso BERT, used for research in gestural communication.

Another important part of human-human communication is gaze. We unconsciously look to see where each other's eyes are looking, and look there too. On the right here we see the Bristol digital robot head used for research in human robot shared attention through gaze tracking.

This, and the next few slides, all represent research done as part of the EU funded CHRIS project, which stands for Cooperative Human Robot Interaction Systems. The BRL led the CHRIS project.

Video clip BERT explains its purpose.

Slide 12

A robot companion needs to be able to learn and recognise everyday objects, even reading the labels on those objects, as we see in the movie clips here.

Video clip BERT learns object names.

Slide 13

And of course our robot companion needs to be able to interact directly with humans, able to give objects, safely and naturally, to a human, and take objects from a human. This remains a difficult challenge - especially to assure the safety of these interactions - but here we see the iCub robot used in the CHRIS project for work on this problem.

Also important, not just to grasping objects but for any possible direct interaction with humans, is touch sensitive hands and fingertips - and here we see parallel research in the BRL on touch sensitive fingertips.

I think a very good initial test of trust for a robot companion would be to hold out your hand for a handshake. If the robot is able to recognise what you mean by the gesture, and respond with its hand, then safely and gently shake your hand - then it would have taken the first step in earning your trust in its capabilities.

Video clip of iCub robot passing objects is part of the wonderful CHRIS project video: Cooperative Human Robot Interactive System - CHRIS Project - FP7 215805 - project web http://www.chrisfp7.eu

Slide 14

Let's now turn to emotional states. An artificial companion robot needs to be able to tell when you are angry, or upset, for instance, so that it can moderate its behaviour accordingly and ask - what's wrong? Humans use facial expression to express emotional states, and in this movie clip we see the android expressive robot head Jules pulling faces with researcher Peter Jaeckel.

If our robot companion had an android face (even though I'm not at all sure it's necessary or a good idea) then it too would be able to express 'artificial' emotions, through facial expression.

Video clip Jules, an expressive android robot head is part of a YouTube video: Chris Melhuish, Director of the Bristol Robotics Laboratory discusses work in the area of human/robot interaction.

Slide 15

Finally I want to turn to perhaps the oddest looking robot in this roundup. Cronos, conceived and built in a project led by my old friend Owen Holland. Owen was a co-founder of the robotics lab at UWE, together with its current director Chris Melhuish, and myself.

Cronos is quite unlike the other humanoid robots we've seen because it was designed to be humanoid from the inside, not the outside. Owen Holland calls this approach 'anthropomimetic'.

Cronos is made from hand-sculpted 'bones' made from thermo-softening plastic, held together with elastic tendons and motors that act as muscles. As we can see in this movie, Cronos 'bounces' whenever it moves even just a part of its body. Cronos is light, soft and compliant. This makes Cronos very hard to control, but this is in fact the whole idea. Cronos was designed to test ideas on robots with internal models. Cronos has, inside itself, a computer simulation of itself. This means that Cronos can in a sense 'imagine' different moves and find ones that work best. It can learn to control its own body.

Cronos therefore has a degree of self-awareness that most other humanoid robots don't have.

I think this is important because a robot with an internal model, and therefore able to try out different moves in its computer simulation before enacting them for real, will be safer as a result. Paradoxically, therefore, I think that a level of self-awareness is needed for safer, and therefore more trustworthy, robots.
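
To make the idea of 'trying out moves in simulation first' a little more concrete, here is a toy sketch in Python. It is emphatically not the Cronos software: the one-dimensional corridor, the names `State`, `internal_model`, `is_safe` and `choose_move`, and the 0.5 m safety gap are all assumptions invented for this illustration. The robot imagines each candidate move with its internal model, throws away any whose predicted outcome would bring it too close to a human, and only then picks the one that gets it nearest its goal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class State:
    position: float  # robot position along a 1-D corridor, in metres


def internal_model(state: State, move: float) -> State:
    """Hypothetical self-simulation: predict the state after a move."""
    return State(position=state.position + move)


def is_safe(state: State, human_position: float, min_gap: float) -> bool:
    """A predicted state is safe if it keeps at least min_gap from the human."""
    return abs(human_position - state.position) >= min_gap


def choose_move(state, candidate_moves, goal, human_position, min_gap=0.5):
    """Imagine every candidate move, discard the unsafe ones, and return
    the safe move whose predicted outcome is closest to the goal.
    Returns None if no candidate is predicted to be safe."""
    safe = []
    for move in candidate_moves:
        predicted = internal_model(state, move)  # 'imagine' the move
        if is_safe(predicted, human_position, min_gap):
            safe.append((abs(goal - predicted.position), move))
    if not safe:
        return None  # better to do nothing than to act unsafely
    return min(safe)[1]


if __name__ == "__main__":
    start = State(position=0.0)
    move = choose_move(start, [0.0, 0.5, 1.0, 1.5], goal=2.0, human_position=1.8)
    print("chosen move:", move)
```

The point of the design is that nothing is enacted until it has been predicted: the move that would get closest to the goal (1.5 m) is rejected in imagination because it ends too near the human, and the robot settles for a shorter, safe one.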

Video clip ECCE Humanoid Robot presented by Hugo Gravato Marques

Slide 16

The various skills and capabilities I've outlined here are almost certainly not enough for our ideal artificial companion. But suppose we could build a robot that combines all of these technologies in a single body - I think we would have moved significantly closer to an artificial companion like Andrew.

Slide 17

Thank you for listening, and thank you for affording me the opportunity to talk about the work of the many amazing roboticists I have represented - I hope accurately - in this talk.

All of the images and movies in this presentation are believed to be copyright free. If any are not then please let me know and I will remove them.

Related blog posts:

On self-aware robots: Robot know thyself

60 years of asking Can Robots Think?

On Robot ethics: The Ethical Roboticist (lecture); Discussing Asimov's laws of robotics and a draft revision; Revising Asimov: the Ethical Roboticist

Could a robot have feelings?