This was a question that came up during a meeting of the Awareness project advisory board two weeks ago at Edinburgh Napier University. Awareness is a project bringing together researchers and projects interested in self-awareness in autonomic systems. In philosophy and psychology self-awareness refers to the ability of an animal to recognise itself as an individual, separate from other individuals and the environment. Self-awareness in humans is, arguably, synonymous with sentience. A few other animals, notably elephants, dolphins and some apes, appear to demonstrate self-awareness. I think far more species may well experience self-awareness - but in ways that are impossible for us to discern.

In artificial systems it seems we need a new and broader definition of self-awareness - but what that definition is remains an open question. Defining artificial self-awareness as self-recognition assumes a very high level of cognition, equivalent to sentience perhaps. But we have no idea how to build sentient systems, which suggests we should not set the bar so high. And lower levels of self-awareness may be hugely useful* and interesting - as well as more achievable in the near-term.

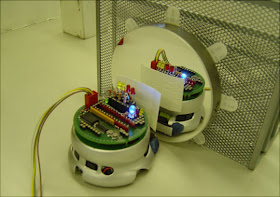

Let's start by thinking about what a minimally self-aware system would be like. Think of a robot able to monitor its own battery level. One could argue that, technically, that robot has some minimal self-awareness, but I think that to qualify as 'self-aware' the robot would also need some mechanism to react appropriately when its battery level falls below a certain level. In other words, a behaviour linked to its internal self-sensing. It could be as simple as switching on a battery-low warning LED, or as complex as suspending its current activity to go and find a battery charging station.

So this suggests a definition for minimal self-awareness:

A self-aware system is one that can monitor some internal property and react, with an appropriate behaviour, when that property changes.

So how would we measure this kind of self-awareness? Well, if we know the internal mechanism (because we designed it), then it's trivial to declare the system as (minimally) self-aware. But what if we don't? Then we have to observe the system's behaviour and deduce that it must be self-aware; for instance, it seems reasonably safe to assume that an animal visits the watering hole to drink because of some internal sensing of 'thirst'.
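The minimal definition above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class name `MinimalRobot`, the 20% threshold, the fixed drain per step and the behaviour labels are all hypothetical, not taken from any real robot.

```python
from dataclasses import dataclass


@dataclass
class MinimalRobot:
    """Toy robot that monitors one internal property and reacts to it."""
    battery_percent: int = 100   # the internal property being monitored
    low_threshold: int = 20      # hypothetical "battery low" threshold

    def sense_battery(self) -> int:
        # Internal self-sensing: read the robot's own battery level.
        return self.battery_percent

    def step(self, drain: int = 10) -> str:
        # Doing anything costs energy (assumed fixed drain per step).
        self.battery_percent = max(0, self.battery_percent - drain)
        # The behaviour is linked to the internal sensing.
        if self.sense_battery() < self.low_threshold:
            return "seek_charger"
        return "continue_task"
```

Because we can read the mechanism, declaring this system (minimally) self-aware is trivial; an outside observer would only see the robot abandon its task and head for the charger.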

But it seems to me that we cannot invent some universal test for self-awareness that encompasses all self-aware systems, from the minimal to the sentient; a kind of universal mirror test. Of course the mirror test is itself unsatisfactory. For a start it only works for animals (or robots) with vision and - in the case of animals - with a reasonably unambiguous behavioural response that suggests "it's me!" recognition.

And it would be trivially easy to equip a robot with a camera and image processing software that compares the camera image with a (mirror) image of itself, then lights an LED, or makes a sound (or something) to indicate "that's me!" if there's a match. Put the robot in front of a mirror and the robot will signal "that's me!". Does that make the robot self-aware? This thought experiment shows why we should be sceptical about claims of robots that pass the mirror test (although some work in this direction is certainly interesting). It also demonstrates that, just as in the minimally self-aware robot case, we need to examine the internal mechanisms.
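To make the thought experiment concrete, here is a rough sketch of such a 'fake' mirror test in Python. Everything in it is an assumption for illustration: images are tiny grids of 0s and 1s of equal size, and the function names and the 0.9 threshold are hypothetical.

```python
def mirror(image):
    """Flip an image left to right, as a mirror would."""
    return [row[::-1] for row in image]


def similarity(a, b):
    """Fraction of matching pixels between two equally sized images."""
    total = sum(len(row) for row in a)
    same = sum(pa == pb for ra, rb in zip(a, b) for pa, pb in zip(ra, rb))
    return same / total


def fake_mirror_test(camera_image, self_template, threshold=0.9):
    """Signal "that's me!" if the camera image matches the stored,
    mirrored picture of the robot. This is pure template matching."""
    return similarity(camera_image, mirror(self_template)) >= threshold


# A stored picture of the robot, and what its camera sees in a mirror.
SELF_TEMPLATE = [[1, 0, 0],
                 [1, 1, 0],
                 [1, 0, 0]]
seen_in_mirror = mirror(SELF_TEMPLATE)
something_else = [[0, 0, 0],
                  [0, 1, 0],
                  [0, 0, 0]]
```

The robot 'passes' whenever the pixels line up, yet nothing in the code refers to the robot's own state or actions, which is exactly why looking only at the outward signal is not enough.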

So where does this leave us? It seems to me that self-awareness is, like intelligence, not one thing that animals or robots have more or less of. And it follows, again like intelligence, there cannot be one test for self-awareness, either at the minimal or the sentient ends of the self-awareness spectrum.

Related posts:

Machine Intelligence: fake or real?

How Intelligent are Intelligent Robots?

Could a robot have feelings?

* In the comments below Andrey Pozhogin asks the question: What are the benefits of being a self-aware robot? Will it do its job better for selfish reasons?

A minimal level of self-awareness, illustrated by my example of a robot able to sense its own battery level and stop what it's doing to go and find a recharging station when the battery level drops below a certain level, has obvious utility. But what about higher levels of self-awareness? A robot that is able to sense that parts of itself are failing and either adapt its behaviour to compensate, or fail safely, is clearly a robot we're likely to trust more than a robot with no such internal fault detection. In short, it's a safer robot because of this self-awareness.

But these robots, able to respond appropriately to internal changes (to battery level, or faults) are still essentially reactive. A higher level of artificial self-awareness can be achieved by providing a robot with an internal model of itself. Having an internal model (which mirrors the status of the real robot as self-sensed, i.e. it's a continuously updating self-model) allows a level of predictive control. By running its self-model inside a simulation of its environment the robot can then try out different actions and test the likely outcomes of alternative actions. (As an aside, this robot would be a Popperian creature of Dennett's Tower of Generate and Test - see my blog post here.) By assessing the outcomes of each possible action for its safety the robot would be able to choose the action most likely to be the safest. A self-model represents, I think, a higher level of self-awareness with significant potential for greater safety and trustworthiness in autonomous robots.
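The generate-and-test idea described above can be sketched as follows. This is a toy illustration, not an implementation of any real robot architecture: the grid world, the `SelfModel` fields, the one-unit battery cost per move and the distance-based safety score are all assumptions made for the example.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class SelfModel:
    """Continuously updated copy of the robot's self-sensed state."""
    x: int
    y: int
    battery: int


# Candidate actions and their effect on position (hypothetical grid world).
MOVES = {"stay": (0, 0), "north": (0, 1), "south": (0, -1),
         "east": (1, 0), "west": (-1, 0)}


def simulate(model, action, move_cost=1):
    """Run the self-model forward one step; the real robot does not move."""
    dx, dy = MOVES[action]
    cost = 0 if action == "stay" else move_cost
    return replace(model, x=model.x + dx, y=model.y + dy,
                   battery=model.battery - cost)


def safety(model, hazards):
    """Score a predicted state: -1 if unsafe, else distance to nearest hazard."""
    if (model.x, model.y) in hazards or model.battery <= 0:
        return -1
    return min((abs(model.x - hx) + abs(model.y - hy) for hx, hy in hazards),
               default=float("inf"))


def choose_safest_action(model, hazards):
    """Try every action inside the simulation and pick the safest outcome."""
    return max(MOVES, key=lambda a: safety(simulate(model, a), hazards))
```

With a hazard directly to the east, a well-charged robot chooses to move away from it, whereas a robot with only one unit of battery left chooses to stay put, because its self-model predicts that any move would leave it stranded. The decision depends on the robot's model of itself as well as its model of the world.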

To answer the 2nd part of Andrey's question, the robot would do its job better, not for selfish reasons - but for self-aware reasons.

(postscript added 4 July 2012)

"Put the robot in front of a mirror and the robot will signal "that's me!". Does that make the robot self-aware?" perfect question, as you mentioned, that's not self awareness.

one more thing, i am pretty much sure that you cannot make a robot self aware by adding new hardware, software or any specific algorithm. it's beyond and detached from all of the above.

it may sound complex but i think (thinking) the answer is very simple

It's not detached... our self awareness is very much attached to our hardware. Our senses are required to report to our brain, all of which are hardware, in order for us to be self aware. Even 'feeling' yourself present is something that requires sensory input. Otherwise how would you be able to differentiate your own consciousness from random brain activity? This 'magical' separation of consciousness from physical reality has to disappear before anyone can even begin to understand self awareness. There must be something to be conscious, and it must be conscious of something, and that something must be more than just itself or it cannot identify concepts such as 'self' distinctly. There is no way around this. With regard to this line of thinking you are either part of the problem, or part of the solution. Those who do not assent to consciousness/awareness as being a physical issue are part of the problem and will only muddy the waters for the rest trying to reason about a solution.

Self-awareness should not be something that some other self-aware entity has 'programmed' or implanted somehow.

Changing the definition of self awareness does not change the concept. It just makes the term confusing. If you need to broaden the term self aware to include robots then by definition they are not the same. conscious robots

I am new at posting comments. so please excuse me, but it would seem to me that the only way to prove the level of awareness of the robot, is to use ourselves as a focus point? So if the robot was to walk up to the mirror and turn left or turn right in an attempt to recognize itself, such as what we do, then by our focus point, set by our standards, that being us, that robot, or being, would show some awareness right....?

Thanks Saravana and Dave for your comments.

Saravana: what makes you think self-awareness cannot be programmed? I agree that a high level of self-awareness is *extremely* hard to programme, and it may be that we can't simply programme it, but instead build a robot that, for instance, develops its self-awareness - as you and I did when we were infants.

Dave: yes I agree 100%. That's why I think that artificial self-awareness, or machine consciousness, cannot be achieved without a body - i.e. in a robot. Self-awareness requires, in my view, embodiment.

Anon: "Self-awareness should not be something that some other self-aware entity has 'programmed' or implanted somehow."

Why not? Are you saying that it would be unethical?

Thanks for your comment!

Thanks Eli for your comment - and your blog post in response. I have some sympathy with your view but the term 'self-awareness' is already used (and perhaps abused) in artificial systems research. The aim of my thinking, and blog post, is to go with the flow and try and clarify the situation - but with limited success perhaps;)

I shall comment in more detail on your blog.

@dave i agree, but i am in a different direction.

as you said, we need sensory circuits, neural network, motors, and central processing(brain) in order to feel ourself? (i doubt not!!). tell me what is happening when i am sleeping, i am pretty much detached from my body, but still i live (in different context). when i am in sleep, i am ignoring my sensors.

when i wakeup, i am again attached with current context, in this case with brain, my brain initializes data from memory.

if you ever went to unconscious state and return you might get idea of what i am talking, your data will be initialized much like pc booting up.

my point is, something which is the real reason for self awareness (true self).

That "something" cannot be simulated with present cpu architecture, definitely not with digital.

may be i could be wrong (i am just a kid).

@dave

You make a point that self awareness is a result of hardware (Your eyes ears etc.) sending signals to your brain. That is how I understood it; correct me if I’m wrong.

I'm afraid I disagree.

1. Is a person who was in a serious accident and loses all five of his senses completely and has zero communication with the outside world no longer self aware? I have no reason to believe so.

2. There is a fundamental difference between the way computers respond to their hardware than people. Let’s say we had a robot with a heat sensor that when it touched something hot would signal its processor who would then signal the motor of the hand to quickly pull its hand away not to get burned. That was a step process 1. Sensor senses heat 2. Sensor sends CPU signal 3. CPU signals motor 4. hand moves away.

If the same thing happened to a person it is a bit different. 1. Nerves sense heat 2. Nerve signals brain 3. Person feels a burning sensation 4. Person decides this is a bad feeling as it hurts and I don't enjoy the feeling of pain 5. Brain sends signal to hand muscles 6. Hand jumps away.

Notice the gap by 4 & 5 which only exist by people. The ability to experience pain and act based on that experience. No amount of transistors will create a computer that can experience pain. Transistors simply are not the type of place you would find pain.

Hence I disagree with your conclusion.

BTW nothing personal but I think it's a little closed minded of you to believe that one who disagrees with you is part of the problem.

@Alan. I fully agree with your point that the word has been hijacked and have no complaints at all for your using it in that manner.

My point is when having this sort of discussion it is not the terms we should be focusing on rather we should be focusing on the concept we are trying to communicate.

As I already pointed out the fact that the term needs to be broadened or stretched means that there is some agreed upon difference between the 2 types of consciousness or self awareness. Therefore it does not make sense to argue further unless we agree what we mean when using the terms. If not you will say A and I will hear B. There will be lacking in the communication.

If you care to call the ability to detect low battery self aware I certainly can't disagree with that. It's a fact.

Your minimal definition of self-awareness seems trivially low since it can be satisfied by objects which are not programmable or capable of computation. Things like cuckoo clocks and Jiffy Pop shouldn't be considered self-aware.

I was told by a computer science lecturer you can program anything.

Isn't this correct if we ignore the size of the program required in some cases?

Maybe the programs needed for intelligence and self awareness are too large and complex for now but that doesn't mean it won't be possible at some point in the future.

I've been thinking about Eli's example about heat and feeling pain.

How do you even begin to program this?

It is a problem that would need a lot of analysing and is it really useful in a robot?

While it is an element of awareness, the lack of it in a robot doesn't necessarily mean the robot can't be aware on other levels.

What are the benefits of being a self-aware robot? Will it do its job better for selfish reasons?

@gullygunyah

That is exactly my point. Pain is not something that can be programmed which is why human consciousness will forever be different than any awareness a computer can have. I try to make this point here can robots experience? Some things can be translated into data, but not everything. You can't explain your experience to one who has never experienced. The point is human awareness goes beyond processing data. You can't process awareness.

The question is not whether a robot having pain has a purpose. It just proves that there is some fundamental difference. No amount of data processing or super large program will produce the experience we experience when we see red. Yes, the processor can differentiate between levels of redness, but it in no way can see it the way we do.

I'm not sure what you mean that a robot can be aware on other levels. Does that mean its aware like the light bulb is aware that the switch is on so it turns on? If that is the case then yes the robot can be aware just as a light can be aware that the switch is on. I would not call that awareness, but if you want to use that as your definition fine.

My dog is self-aware.

Actually, anyone who limits themselves to being only physical beings has done just that - limited themselves. Why must you limit yourself to something that is itself incomprehensible? There are many people who have been aware outside of their bodies, and there is plenty of proof that they were not hallucinating, but too many scientists (I have a degree in physics) refuse to look at anything that does not fit their world-view. In spite of this, I am intrigued about the concept of a physical machine having self-awareness, and encourage you to keep playing, just don't automatically assume that physical reality is all that there is.

Eli there are many levels of awareness. There is awareness of other beings and objects, there is the recognition that you are thinking at a moment in time, or the feeling of success at a goal achieved, etc.

As for the "feeling" you have with a particular colour of red, it could be induced by the combination of other sensory input and memory, particularly smell.

A lot of "feelings" are just chemical reactions in our brains.

@Anon: “I am new at posting comments…” Yes I see what you are getting at – but by taking a human-centric view of self-awareness I think we are closing off lots of interesting possibilities for robots that are self-aware, but not like humans. But you’re right that if we could build a robot that could react like a human when it sees itself in a mirror (without faking it – as I warn in my blog post) then yes we would have to call it self-aware.

@Eli: yes I agree that we need to be very clear about what we mean by self-awareness, but surely that’s exactly the point of attempting to define the term as unambiguously as possible. However, it occurs to me that what I’m trying to define is artificial self-awareness.

@Eric: yes true – but why should a thing necessarily need to have programming or computation in order to be minimally self-aware? At the same time I agree that a definition that admits a cuckoo clock to be self-aware probably needs tightening up.

@Gullygunyah: “…about heat and feeling pain. How do you even begin to program this?” Well since we’re interested in building artificial self-awareness, then any ‘artificial pain’ response in a robot will be necessarily not the same as the pain you feel. But suppose we built a robot with artificial skin, and embedded within that artificial skin are receptors that are sensitive to heat. Then we program the robot to recoil when any of those receptors are triggered. The robot would then behave as if it was in pain. I think this would be a useful response since otherwise the robot’s skin would easily get damaged (just like some desperately unfortunate humans who are born with a rare condition that means they don’t feel pain – who suffer terrible, often self-inflicted, injuries).

@Andrey. Really great question. I’ll answer this in a postscript to my blog.

@Eli: see also my response above to @Gullygunyah. I agree with you that if we were to build robots that can self-consciously experience the world – they will not experience the world in the way we do. Trying to imagine how a robot experiences the world would be just as impossible as trying to imagine how a beetle, a bat, or a dolphin experience the world.

@Anon: “My dog is self-aware”. Yes, I’m sure she is – but she probably doesn’t think about thinking in the way you do. So it’s arguably a different level of self-awareness.

I’m afraid I don’t agree with your suggestion that people can be aware of being out of their bodies. Although it’s true that people having an ‘out of the body’ experience feel as if they are out of their bodies, the overwhelming evidence is that it’s an illusion. See Susan Blackmore’s book ‘Beyond the Body’.

@gullygunyah I’m not really sure how you are defining awareness. Is a lightbulb aware that the switch is on?

Yes there is awareness of other objects and there is awareness of feelings. I don’t know why you are calling these levels of awareness. Are one of these awarenesses greater than the other? I don’t see how. I would rather say awareness manifests itself in multiple ways, personal feelings and awareness of other objects.

As for your point about feelings, I don’t think it is relevant how it is induced as long as it is experienced.

@Alan. What is the fundamental difference between artificial self awareness and real self awareness? If I got your point you call artificial self aware as the ability to act on certain events. The truth is I really don’t like the term because the ability to react has little to do with awareness. My example is the lightbulb. I don’t think anyone is inclined to say that the lightbulb has a certain level of awareness because it turns on when the switch is turned on.

I am differentiating between reacting and awareness. Humans are aware regardless if they are reacting therefore I don’t call the ability to react any form of awareness. Unless it decided to react. A human makes a conscious decision to react, but the lightbulb does not. I’m sure you are aware of the mechanics of CPUs and realize that robots don’t decide to act. Transistors are not capable of making decisions any more than the lightbulb decides to turn on. Transistors just react to currents of electrons.

I’m not sure if we are disagreeing at all. We might just be missing each other. I am having a little difficulty understanding the relationship between reaction and awareness as I pointed out. My problem is when you use the term artificial self awareness you are implying that there is some relationship between awareness and reacting. I can’t complain of your use of the term I just wish there was a better way.

You point out that we don’t experience the world the same way dolphins do and neither will we experience the way robots do.

First I would like to point out that we have no way of knowing if you and I experience the same way either. The only reason we understand each other when we speak about color is because we both know that roses are red (Usually) therefore when you say red you mean the same color as the rose. We don’t have a method of communicating the experience. Which is the reason a blind person can’t understand color. So when we say red we understand each other even though we have no way of knowing that our experiences are similar. Rather we use the redness of the rose as our point of reference.

Which boils down to my main point. Since robots are data processors and experience is not something that we can communicate to each other, I have reason to believe that experience can’t be converted to data. The logical conclusion should be that data processors have no way of experiencing. As there is no relationship between experience and data.

So the question isn’t whether we can experience the same way robots will. I am arguing that robots cannot experience at all.

I’m not sure my point about experience not being able to turn into data came across.

Try to describe to someone who never ate an apple what it tastes like. I can’t do it.

You point out that the dog probably doesn’t think the way we do. I would not call that a different level of self awareness. They are both equally self aware. It just manifests itself differently.

@Davidson

"1. Nerves sense heat 2. Nerve signals brain 3. Person feels a burning sensation 4. Person decides this is a bad feeling as it hurts and I don't enjoy the feeling of pain 5. Brain sends signal to hand muscles 6. Hand jumps away."

When humans sense excessive heat, it triggers a preprogrammed reflex. It's only (milliseconds) later that you (the human) realize that it hurts.

1. Nerves sense heat and send signal toward head.

2. Interneurons receive signal and send signal to yank hand away.

3. Hand moves away.

4. Brain receives the message and thinks "ouch".

http://en.wikipedia.org/wiki/Withdrawal_reflex

It is interesting to read what others feel the term self awareness means. From my perspective there appear to be a few kinds of self awareness with varying levels of sophistication, each of which has real world implications.

I would contend that, to be aware enough of your physical self to make sure you don't bump into anything else is one form of self awareness.

It is a boundary management approach that could be extended into dealing with virtual objects to avoid data corruption or inadvertent deletion of data.

It is another form of self awareness that allows questioning of the approach being used to handle the task at hand. To use a trivial example, getting from one end of a warehouse to the other quickly and without colliding with anything else and without having a predefined route to follow. Allowing the route to be dynamically altered in response to updated input with respect to its own limitations.

(An obstruction has been encountered, is my existing route still the best way to get to my destination, am I able to fly over it, Can it be dodged by moving under rows or racks, Can I fit past it, Am I on rails,etc)

It is yet another form of self awareness that allows the observation of the effect the individual has on other individuals and objects. For example, noticing some objects and the other robots are smaller and bounce out of the way in a collision without causing it damage so it can take the shortest route only avoiding larger fixed objects and increase its efficiency.

It is another kind of self awareness again, that notices the individual is part of a whole system. This allows the realisation that bouncing objects and other robots out of the way may improve the individual's efficiency but significantly degrades the performance of the warehouse as a whole and that this is unacceptable/sub-optimal.

Sadly this last type is not always achieved by people.

Hence houses with children have naughty corners and companies have HR departments.

Each of these types builds on the previous by combining it with observation and analysis of possibilities. No matter what the allowed level of sophistication of the processing or sensory hardware is, both are needed.

It is possible to program all of these things.

The challenge of AI is to use minimal programming and allow the system to "discover" each level in turn with minimal interference from the programmer.

There also appears to be confusion between *gaining* self awareness and *having* self awareness.

To gain self awareness requires some sort of sensory input. Otherwise it is impossible to determine where the boundary of the "self" lies.

(Spend some time in a sensory deprivation tank. Without touching the sides or moving, try to determine your physical boundaries)

However, once this is done maintaining self awareness only requires a good memory.

While inside a sensory deprivation tank, you are still aware of the size of the world you live in and your size in comparison, you are still aware of the ways you and it can interact, you can still think and question the veracity of your thoughts.

One of the difficulties in this field is separating our personal sense of self from the problem of determining what it would mean to a machine. Because, as humans we either have some form of sensory input or we die too quickly to perform further research.

If somebody was born without the ability to touch, see, smell or hear, they would have no way of determining the external world or the boundary between it and them. Their universe would be their thoughts and vague senses of hunger, thirst and possibly a squishy sense of relief.

But they could still define a sense of self by thinking "I am not the hunger or the thirst. That is just something transient that happens to me. Therefore there must be me and something other than me."

I have to agree with Dave, to contribute to this field you must leave any pseudo religious ideas of consciousness and souls at the door.

Your mileage may vary.

@ben interesting point.

I still think there is a gap. As the reason the hand has the reflex is based on the memory of what pain feels like. So it is the pain that trained or programmed the reflex to begin with. Babies are not born with this.

Sorry, I didn't have time to read all the comments, so I don't know if anyone else has posted with the same sentiment.

I think that the definition of being minimally self-aware is completely incorrect. Your definition says -

"A self-aware system is one that can monitor some internal property and react, with an appropriate behaviour, when that property changes."

My smartphone makes a noise when its battery is running low. By the descriptions and definitions in this post, that would suggest that my smartphone were self-aware, which obviously it is not.

I think that a better description for being minimally self-aware would be to say -

"A self-aware system is one that can monitor some internal property and recognise what the future effect will be on itself when that property changes."

It is not necessary for the self-aware system to 'react with appropriate behaviour'. This would only be necessary for an outside observer to draw conclusions from. Although such a reaction would be probable, it is not necessary (e.g. the system may be self-aware and still choose to ignore the property change (we are not necessarily talking about a successful self-aware system)).

I recognise that my definition is much more difficult for an outside observer to draw conclusions from, but I think that it is why an error was made when stating your definition. Your definition seems to be motivated by a desire to be able to measure self-awareness. Whereas the true definition may remain unmeasurable.

When we say self aware are we concluding that self aware is also conscious? Are we getting spiritual even and saying self awareness is having a soul?

I hope not because that is not simplifying the problem.

Perhaps we need to clarify this before deciding whether we can measure self awareness.

Martin you make a very good point and the degree to which a system may calculate future effects may very well be what needs measuring. Unless of course we are concluding that self awareness = consciousness or that a robot should pray to its God.

For the mirror test you could fake the robot recognising itself by purely image matching. But a robot that was self aware would perhaps pull faces or make moves based on its prediction of what should occur as it looks at itself in the mirror. Can I dare to suggest this is self awareness?

Also loved shed dwellers insights.

Hi Alan,

The link to your blog came from CodeProject daily news.

The question you pose is as interesting as the Turing test for intelligence (didn't this just get found to be flawed?)

The iRobot floor cleaning robots seek out their charging station when the battery is run down, don't they, yet I would not call them self-aware. It does have something to do with an event-response not being programmed into the machine.

Self awareness implies that the "being" be aware of something about itself by itself. Thus, if the system could learn that when the battery became depleted it needed to find a charging station, that would be self-awareness.

Hey hope all is well with you, it has been a while.

David Burgess

Is the roomba self-aware because it goes to its docking station when its battery runs out? A decade ago, most non-tech ppl would have been impressed by this, and ascribed human qualities to it. Nowadays it's just a smart technical achievement, not considered to be extraordinary anymore.

Which gets me to think that we're in a bad position to define awareness as of now, because we don't yet know what awareness is not.

OTOH, if we think of awareness in human terms, it's the capability to build a mental model of the environment we're part of, to see ourselves in context, whether it's the immediate physical context, a broader physical context such as the planet's ecosystem, or a non-physical context, such as a political or social context. Maybe this opens up the possibility of another definition?

Totally agree with Alan
